Abstract

We provide the first large dataset of human fixations on physical 3D objects presented in varying viewing conditions and made of different materials. Our experimental setup is carefully designed to allow for accurate calibration and measurement. We estimate a mapping from the pair of pupil positions to 3D coordinates in space and register the presented shape with the eye tracking setup. By modeling the fixated positions on 3D shapes as a probability distribution, we analyze the similarities among different conditions. The resulting data indicate that salient features depend on the viewing direction. Stable features across different viewing directions seem to be connected to semantically meaningful parts. We also show that it is possible to estimate the gaze density maps from view-dependent data. The dataset provides the necessary ground truth data for computational models of human perception in 3D.
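As a rough illustration of what treating fixations as a probability distribution over a shape can look like, the sketch below spreads each fixation over mesh vertices with a Gaussian kernel and compares two conditions with the Bhattacharyya coefficient. This is not the method from the paper: the function names, the Euclidean (rather than on-surface) distance, the kernel width `sigma`, and the choice of similarity measure are all assumptions made for the example.

```python
import math

def gaze_density(vertices, fixations, sigma=0.01):
    """Hypothetical helper: per-vertex gaze density from 3D fixation points.

    Each fixation contributes an isotropic Gaussian weight to every vertex,
    based on straight-line distance (an assumption; a geodesic distance on
    the surface may be more appropriate). The result is normalized to sum to 1.
    """
    weights = [
        sum(math.exp(-math.dist(v, f) ** 2 / (2 * sigma ** 2)) for f in fixations)
        for v in vertices
    ]
    total = sum(weights)
    if total == 0:
        raise ValueError("no fixation mass landed on any vertex")
    return [w / total for w in weights]

def bhattacharyya(p, q):
    """Similarity of two discrete distributions on the same vertices.

    Returns a value in [0, 1]; identical distributions give 1.
    """
    return sum(math.sqrt(a * b) for a, b in zip(p, q))

# Toy example: three vertices, two viewing conditions with different fixations.
vertices = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)]
density_a = gaze_density(vertices, [(0.0, 0.0, 0.0)], sigma=0.5)
density_b = gaze_density(vertices, [(1.0, 0.0, 0.0)], sigma=0.5)
similarity = bhattacharyya(density_a, density_b)
```

With real data, the vertices would come from the 3D models and the fixations from the per-object gaze data, provided both are expressed in the same coordinate frame.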

Download File "Tracking the Gaze on Objects in 3D: How do People Really Look at the Bunny?"

[pdf, 23.2 MB]

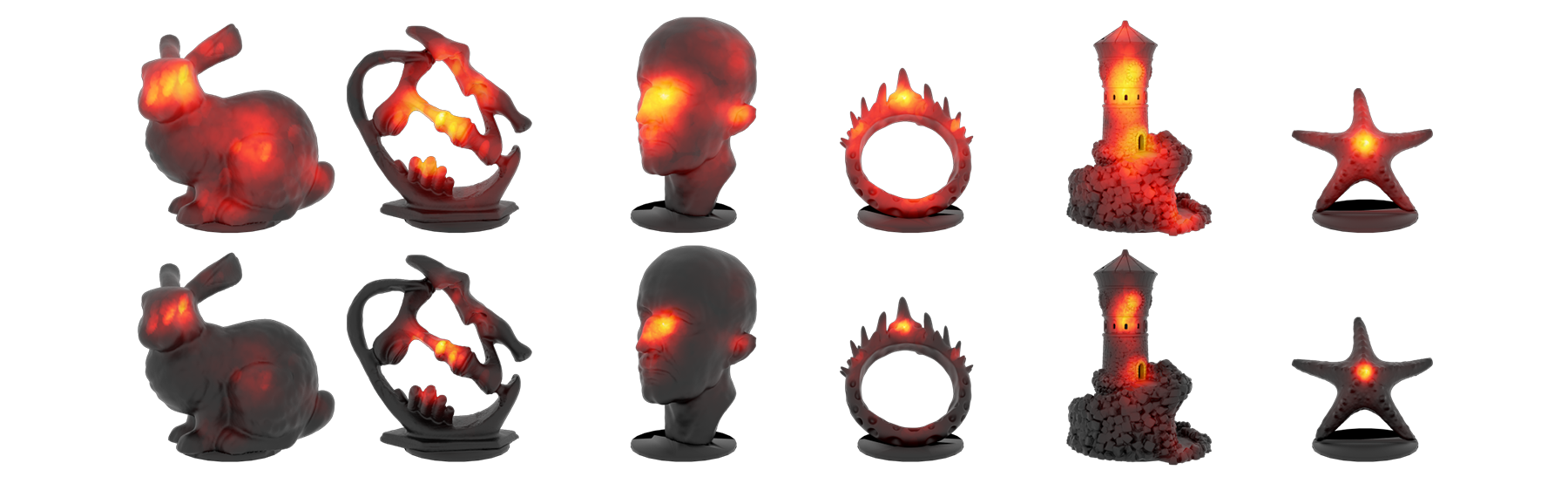

Dataset of human fixations on physical 3D objects


Gaze Viewer: a WebGL-based visualization tool for exploring the fixation data collected from 70 observers.

3D models [91.3 MB zipped]

Viewing sequences [35 KB zipped], Raw samples [207 MB zipped], and Fixations [2.1 MB zipped]: raw data from the original recording.

Gaze data per object [3.6 MB zipped]: estimated gaze positions, based on the method described in the paper.

Fast Forward Clip

Acknowledgments

We would like to thank David Lindlbauer for assisting with the experimental setup and implementing the WebGL viewer. We would also like to thank all participants in our experiment.