Abstract

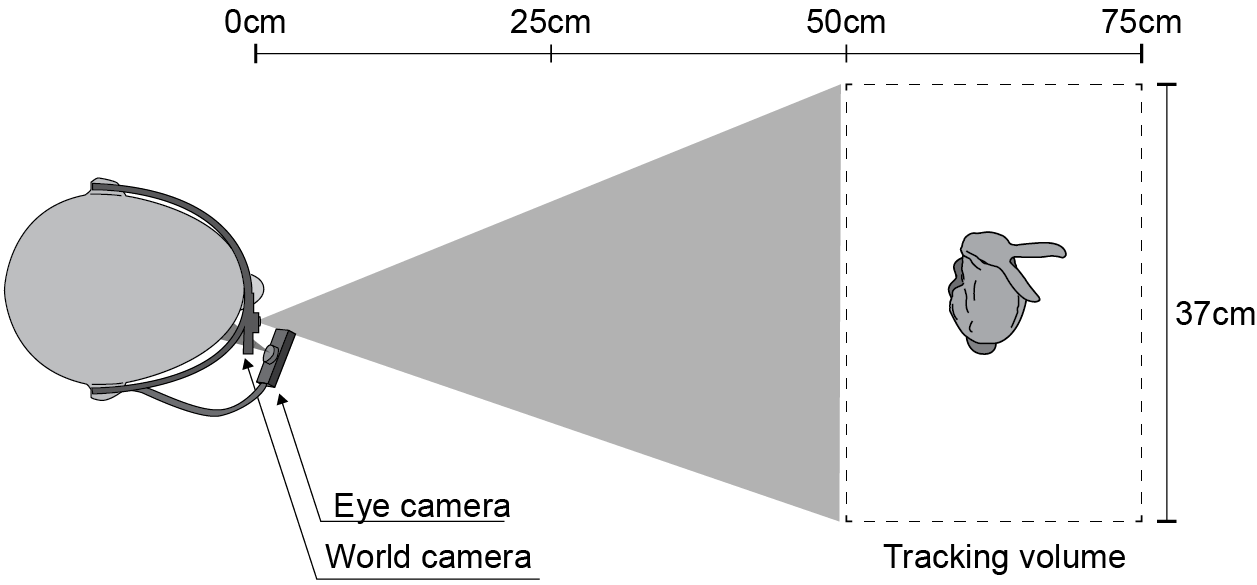

Many applications in visualization benefit from accurate knowledge of where a person is looking. We present a system for accurately tracking gaze positions on a three-dimensional object using a monocular head-mounted eye tracker. We accomplish this by 1) using digital manufacturing to create stimuli with accurately known geometry, 2) embedding fiducial markers directly into the manufactured objects to reliably estimate the rigid transformation of the object, and 3) using a perspective model to relate pupil positions to 3D locations. This combination enables efficient and accurate computation of the gaze position on an object from measured pupil positions. We validate the accuracy of our system experimentally, achieving an angular resolution of 0.8° and a depth error of 1.5% using a simple calibration procedure with 11 points.
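The abstract does not spell out the perspective model, so the following is only a minimal sketch under one assumption: pupil positions in the eye camera image are treated as the perspective projection of the fixated 3D point through a 3x4 projection matrix P, estimated with the Direct Linear Transform (DLT). Such a matrix has 11 degrees of freedom (12 entries up to scale), and each calibration point contributes two linear equations, so six non-coplanar points suffice in principle and 11 points give an over-determined least-squares fit. The function names `fit_projection`, `project` and `backproject` are hypothetical and not taken from the paper.

```python
import numpy as np


def fit_projection(points_3d, pupils_2d):
    """Estimate a 3x4 projection matrix P with the Direct Linear Transform.

    points_3d: (N, 3) calibration targets in a fixed 3D frame (assumed here).
    pupils_2d: (N, 2) pupil positions measured in the eye camera image.
    The 3D points must not all lie in one plane, otherwise DLT is degenerate.
    """
    X = np.asarray(points_3d, dtype=float)
    x = np.asarray(pupils_2d, dtype=float)
    if X.shape[0] < 6 or X.shape[0] != x.shape[0]:
        raise ValueError("need at least 6 matching 3D/2D correspondences")
    rows = []
    for (xw, yw, zw), (u, v) in zip(X, x):
        Xh = [xw, yw, zw, 1.0]
        # u = (p1 . Xh) / (p3 . Xh) and v = (p2 . Xh) / (p3 . Xh) give two
        # equations that are linear in the 12 entries of P.
        rows.append([*Xh, 0.0, 0.0, 0.0, 0.0, *(-u * c for c in Xh)])
        rows.append([0.0, 0.0, 0.0, 0.0, *Xh, *(-v * c for c in Xh)])
    A = np.array(rows)
    # Minimise ||A p|| subject to ||p|| = 1: the right singular vector that
    # belongs to the smallest singular value. With noisy real data,
    # normalising the coordinates first (Hartley) improves conditioning.
    _, _, vt = np.linalg.svd(A)
    P = vt[-1].reshape(3, 4)
    return P / np.linalg.norm(P)


def project(P, points_3d):
    """Predict 2D pupil positions for 3D points under the model P."""
    X = np.asarray(points_3d, dtype=float)
    Xh = np.hstack([X, np.ones((X.shape[0], 1))])
    x = Xh @ P.T
    return x[:, :2] / x[:, 2:3]


def backproject(P, pupil_2d):
    """Return the 3D line (origin, unit direction) that projects to pupil_2d.

    The origin is the projection centre (P @ [origin, 1] = 0); the sign of
    the direction is not meaningful, so this describes a line, not a ray.
    """
    M, p4 = P[:, :3], P[:, 3]
    origin = -np.linalg.solve(M, p4)
    d = np.linalg.solve(M, np.array([pupil_2d[0], pupil_2d[1], 1.0]))
    return origin, d / np.linalg.norm(d)
```

To turn a measured pupil position into a gaze point on the object, one would presumably map this line into the object's frame using the rigid transformation estimated from the fiducial markers and intersect it with the known mesh; that step is left out of the sketch.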


BibTeX

@incollection{Wang2015,
  author    = {Xi Wang and David Lindlbauer and Christian Lessig and Marc Alexa},
  title     = {Accuracy of Monocular Gaze Tracking on 3D Geometry},
  booktitle = {Workshop on Eye Tracking and Visualization (ETVIS) co-located with IEEE VIS},
  date      = {2015-10-25}
}

Acknowledgments

This work has been partially supported by the ERC through grant ERC-2010-StG 259550 (XSHAPE). We thank Felix Haase for his valuable support in performing the experiments and Marianne Maertens for discussions on the experimental setup.