Abstract

When retrieving an image from memory, humans usually move their eyes spontaneously, as if the image were in front of them. Such eye movements correlate strongly with the spatial layout of the recalled image content and act as memory cues that facilitate retrieval. However, it has so far remained unclear how closely eye movements during imagery match the eye movements made while looking at the original image. In this work we first quantify the similarity between eye movements made while recalling an image and those made while encoding the same image, and then investigate whether comparing such pairs of eye movements can be used for computational image retrieval. Our results show that computational image retrieval based on eye movements during spontaneous imagery is feasible. Furthermore, we show that such a retrieval approach generalizes to unseen images.
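To make the idea of retrieving an image by comparing recall and encoding eye movements concrete, here is a minimal sketch. It is not the method used in the paper. It assumes fixations are given as (x, y) pairs in normalized [0, 1] screen coordinates. It also swaps in a simple similarity measure: each fixation sequence becomes a Gaussian-smoothed density map on a coarse grid, and two maps are compared with Pearson correlation. The function names, grid size, and `sigma` are hypothetical choices. The sketch also ignores the spatial distortions that imagery gaze may show relative to encoding gaze.

```python
import numpy as np


def fixation_map(fixations, grid=(24, 32), sigma=1.5):
    """Hypothetical helper: turn (x, y) fixations in normalized [0, 1]
    coordinates into a Gaussian-smoothed density map on a coarse grid,
    then center it (zero mean) and scale it to unit norm."""
    h, w = grid
    ys, xs = np.mgrid[0:h, 0:w]  # row (y) and column (x) index grids
    density = np.zeros(grid)
    for x, y in fixations:
        cx, cy = x * (w - 1), y * (h - 1)  # map to grid-cell coordinates
        density += np.exp(-((xs - cx) ** 2 + (ys - cy) ** 2) / (2 * sigma ** 2))
    density -= density.mean()
    norm = np.linalg.norm(density)
    return density / norm if norm > 0 else density


def similarity(fix_a, fix_b):
    """Pearson correlation between two fixation maps (the dot product of
    zero-mean, unit-norm maps)."""
    return float(np.sum(fixation_map(fix_a) * fixation_map(fix_b)))


def retrieve(recall_fixations, encoding_fixations_by_image):
    """Rank candidate images by how similar their encoding fixations are
    to the recall fixations. Returns image ids, best match first."""
    scores = {
        image_id: similarity(recall_fixations, encoding)
        for image_id, encoding in encoding_fixations_by_image.items()
    }
    return sorted(scores, key=scores.get, reverse=True)


if __name__ == "__main__":
    # Toy, made-up fixations purely for illustration (not from the dataset).
    encoding = {
        "beach": [(0.2, 0.3), (0.25, 0.35), (0.8, 0.7)],
        "city": [(0.5, 0.1), (0.5, 0.9)],
    }
    recall = [(0.22, 0.32), (0.78, 0.72)]
    print(retrieve(recall, encoding))
```

In this sketch, retrieval is a nearest-neighbour ranking: the recalled gaze is scored against every candidate's encoding gaze, and the best-scoring image is returned. Evaluating on unseen images would only require adding new entries to the candidate dictionary. No training step is involved.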

"Computational discrimination between natural images based on gaze during mental imagery" [PDF]

Dataset

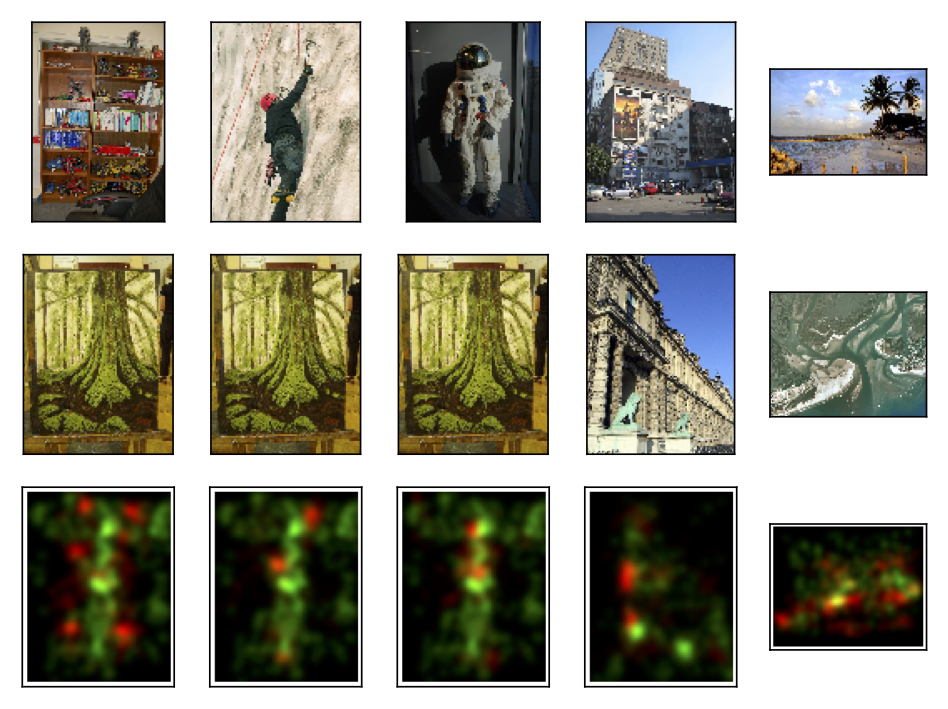

Image stimuli [12 MB zipped]

Raw samples [158 MB zipped]

Eye movement events [1.6 MB zipped]

Acknowledgments

We would like to thank all participants for taking part in our experiment. Furthermore, we thank Marianne Maertens for valuable advice and David Lindlbauer for help in conducting the experiment. This work has been partially supported by the ERC through Grant ERC-2010-StG 259550 (XSHAPE). Open access funding provided by Projekt DEAL.